Recently, cinemas, home theater systems and game consoles have undergone a rapid evolution towards stereoscopic representation, with recipients gradually becoming accustomed to these changes. Stereoscopy techniques in most media present two offset images separately to the left and right eye of the viewer (usually with the help of glasses separating both images), resulting in the perception of three-dimensional depth. In contrast to these mass market techniques, true 3D volumetric displays or holograms that display an image in three full dimensions are relatively uncommon. The visual quality and visual comfort of stereoscopic representation are constantly being improved by the industry. Digital games allow for intense experiences because they can provide visually authentic, life-like 3D environments and interaction with the game world itself and other players. Since the release of the Nintendo Wii in 2006 and later Sony Move as well as Microsoft Kinect (both 2010), modern console games use motion control in addition to the classic gamepad. Both the use of these natural user interfaces (NUIs) and stereoscopic representation determine the user experience (UX) with the system. The rise in popularity of these technologies has led to high expectations regarding an added value in entertainment, immersion, and excitement, especially for 3D games, as both technologies are employed to enable richer and deeper media experiences. For the commercial success of these technologies, the resulting UX has to be enjoyable and strain-free. Because this is not always the case, we have to understand the factors underlying the UX of stereoscopic entertainment media and natural user interfaces to improve it further.

In this paper, we review the current state of user experience research on stereoscopic games and the theoretical frameworks underlying it. We further argue that previous research primarily concentrated on direct effects of stereoscopic representation without considering interaction processes between input and output modalities. More specifically, UX should only be enriched if games enable users to meaningfully map mental representations of input (NUIs) and output (stereoscopic representation) space. We will show how the concept of mental models can account for both information channels and present implications for game studies and game design.

User Experience and Games

There are many different approaches to the concept and measurement of UX in games (Komulainen, Takatalo, Lehtonen & Nyman, 2008). UX is often defined as an umbrella term for all qualitative experiences a user has while interacting with a given product, and it reaches beyond the more task-oriented term usability (for an overview, see Bernhaupt, 2010 or Krahn, 2012). The ISO definition of UX focuses on a “user’s perception and responses resulting from the use or anticipated use of a product, system, service or game” (ISO FDIS 9241-210:2010, 2010).

Several other concepts are closely related to UX in games. Terms such as immersion (Murray, 1997; McMahan, 2003), flow (Csikszentmihalyi, 1975), gameplay (Rollings & Adams, 2003), fun and playability are often used to explain UX from a game design point of view (Bernhaupt, Eckschlager & Tscheligi, 2007), and have been used to evaluate UX. Pietschmann (2009) analyzed further concepts of user experience from other fields of research for their applicability to UX research, such as presence (Biocca, 1997), cognitive absorption (Agarwal & Karahanna, 2000), gameflow (Sweetser & Wyeth, 2005), engagement (Douglas & Hargadon, 2000), and involvement (Witmer & Singer, 1998). A combined analysis revealed a high degree of consilience that suggests a considerable overlap between the concepts. Because UX is defined and operationalized in so many different ways, theories and results are difficult to compare, which hampers progress in both.

The rise of consumer stereoscopic display technologies poses new challenges to UX research in games, as these technologies claim to increase visual authenticity. One of the main questions in this context is whether this increased visual authenticity in games automatically leads to an enhanced UX and, if so, which mechanisms exactly constitute this enhanced experience. Another challenge is the measurement of stereoscopic UX in video games.

Describing entertainment experiences in terms of (tele)presence is theoretically and empirically well grounded in research on interactive media (e.g. Tamborini & Skalski, 2006; Ravaja et al., 2006; Bae et al., 2012). Many studies have focused on the measurement of presence, and a broad body of research using questionnaires as well as behavioral and psychophysiological measures exists (for an overview, see Baren & Ijsselsteijn, 2004) that can be employed in research on UX in stereoscopic games.

Effects of Stereoscopic Representation in Different Media

Stereoscopic displays induce a convergence-accommodation conflict in the user because they present images at a fixed focal length (i.e. the distance to the screen) but vary the object convergence to simulate depth. During the fixation of real world objects, convergence and accommodation are closely linked, but the fixed focal length of a stereoscopic display results in a conflict within our visual system. As a result, viewing stereoscopic images can have negative short-term consequences, including difficulty fusing binocular images and therefore reduced binocular performance (Hiruma, Hashimoto & Takeda, 1996; MacKenzie & Watt, 2010). Consequently, a great deal of research has focused on negative effects of stereoscopic displays such as visual discomfort or visual fatigue, and has suggested how to avoid them (e.g. Häkkinen, Takatalo, Kilpeläinen, Salmimaa & Nyman, 2009; Tam, Speranza, Yano, Shimono & Ono, 2011; for a review see Lambooij, Ijsselsteijn, Fortuin & Heynderickx, 2009; Rajae-Joordens, 2008 and Howarth, 2011).
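
The geometry behind this conflict can be illustrated with a small numerical sketch. It is not taken from the studies cited above; it only assumes simple similar-triangle viewing geometry, a viewer centered in front of the screen, and an eye separation of 65 mm. The function names and the default value are hypothetical and chosen for illustration.

```python
def vergence_distance(viewing_distance_m: float,
                      parallax_m: float,
                      eye_separation_m: float = 0.065) -> float:
    """Distance (in metres) at which the two lines of sight converge.

    parallax_m is the horizontal on-screen offset between the left-eye and
    right-eye image of a point: positive values place the point behind the
    screen, negative values in front of it, zero exactly on the screen.
    The 65 mm eye separation is an assumed typical value.
    """
    if parallax_m >= eye_separation_m:
        raise ValueError("parallax must be smaller than the eye separation")
    return eye_separation_m * viewing_distance_m / (eye_separation_m - parallax_m)


def conflict_diopters(viewing_distance_m: float,
                      parallax_m: float,
                      eye_separation_m: float = 0.065) -> float:
    """Mismatch between focus demand (screen) and convergence demand (object)."""
    focus_demand = 1.0 / viewing_distance_m
    vergence_demand = 1.0 / vergence_distance(
        viewing_distance_m, parallax_m, eye_separation_m)
    return abs(focus_demand - vergence_demand)


if __name__ == "__main__":
    # Viewer sits 2 m from the screen; parallax values are in metres.
    for parallax in (-0.02, 0.0, 0.02):
        print(parallax,
              round(vergence_distance(2.0, parallax), 3),
              round(conflict_diopters(2.0, parallax), 3))
```

With zero parallax both demands coincide and the mismatch vanishes; the further an object is pushed in front of or behind the screen plane, the larger the mismatch becomes. The sketch makes no claim about which values are still comfortable for viewers.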

These negative effects are part of the concept of simulator sickness (SS), which has been established in virtual reality research since the early 1980s (e.g. Frank, Kennedy, Kellogg & McCauley, 1983). It is usually measured via the simulator sickness questionnaire (SSQ; Kennedy, Lane, Berbaum & Lilienthal, 1993). Symptoms of SS have also been identified in studies on stereoscopic gaming. For example, Häkkinen, Pölönen, Takatalo & Nyman (2006) found that after stereoscopic representation of a car racing game, eye strain and disorientation symptoms were significantly elevated compared to non-stereoscopic modes of representation.

However, research has also focused on positive effects of stereoscopic representation on UX in different media in order to investigate the industry’s claim of enriched UX. Ijsselsteijn, de Ridder, Freeman, Avons & Bouwhuis (2001) studied positive and negative aspects in stereoscopic, non-stereoscopic, still, and moving video conditions. In all conditions, a video with a rally car traversing a curved track at high speed was shown to the participants. The results revealed a significant effect of stereoscopic representation on the subjective judgments of presence, but not on vection, involvement, or simulator sickness. However, they concluded that the presence ratings were more affected by image motion than by the stereoscopic effect.

Rajae-Joordens, Langendijk, Wilinski & Heynderickx (2005) reported similar findings: Experienced gamers played the first-person shooter Quake III: Arena in a stereoscopic and a non-stereoscopic condition. The participants reported increased presence and engagement in the stereoscopic condition but no symptoms of simulator sickness. The authors concluded that stereoscopic representation elicited more intense, realistic experiences, a stronger feeling of presence and thus a richer UX. Additionally, several studies found that stereoscopy enhances the user’s depth perception and eye-hand coordination in real world scenarios (e.g. McMahan, Gorton, Gresock, McConnell & Bowman, 2006).

Stereoscopic Representation Does Not Automatically Enhance User Experience

Contrary to earlier findings, recent studies found that stereoscopic representation in different media does not automatically improve UX. Takatalo, Kawai, Kaistinen, Nyman & Häkkinen (2011) used a hybrid qualitative-quantitative methodology to assess UX in three display conditions (non-stereoscopic, medium stereo separation, high stereo separation) while participants played the racing game Need for Speed Underground. They found that the medium and not the high separation condition yielded the best experiences. The authors concluded that the discomfort of stereoscopic representation (due to limitations of stereoscopic technology) is tolerable in the medium separation condition but diminishes the UX in the high separation condition.

Another study by Sobieraj, Krämer, Engler & Siebert (2011) compared the experiences of 2D and 3D cinema audiences of the same movie regarding entertainment, presence and immersion. Results revealed that the stereoscopic condition increased neither the entertainment experience, nor positive emotions, nor the feeling of presence or immersion.

Elson, van Looy, Vermeulen & van den Bosch (2012) conducted three experiments to investigate the effects of visual presentation on UX. In the first study, participants played a platform game (Sly 2: Band of Thieves) in a standard definition, high definition or 3D condition. In the second study, they used a more recent action adventure game (Uncharted 3: Drake’s Deception) with the same viewing conditions. In their third study, Elson and colleagues, in collaboration with a game developer, created a game that requires spatial information processing (3D Pong) and employed the same experimental conditions.

In all three studies the results showed no differences in any variables between the conditions; there was no effect of stereoscopic representation on any measure of UX.

Disparities between studies might be explained by differences in the experimental designs. We already indicated that UX has a broad range of possible measures that might also differ significantly in their sensitivity to stereoscopic representation. Additionally, it is not certain that participants in all studies were given sufficient time to adapt to the mode of presentation. For the latter case, further longitudinal research is required to assess the user’s shifting perception of, and thereby adaptation to, stereoscopic representation over time. However, the studies reported above indicate that the sole use of stereoscopic representation might not automatically enhance the UX; that is, the rule of thumb “the more, the better” does not seem to apply here.

Meaningful Relation of Content, Input, and Output

One flaw of research on stereoscopic media is that researchers, assuming an omnibus effect, often did not focus on the underlying mechanisms by which stereoscopic representation would enrich UX. We argue that the lack of change in UX with stereoscopic representation in previous studies can be explained by the concept of mental interaction models and the related cognitive processes during gameplay. To successfully enrich UX, games have to create a meaningful relation between stereoscopic representation, input modality and the type of task that players have to fulfill. Therefore, we should not consider stereoscopic representation merely as an attribute of games on its own, but as an attribute that is closely tied to other attributes of the medium in which it is implemented.

First, we argue that UX can only be considerably enhanced by stereoscopic representation if users can interact via natural input devices within the same three-dimensional space they visually perceive. The implementation of both technologies facilitates the user’s construction of a mental interaction model by mapping the space of the virtual environment to the real space where the player performs actions. Second, this spatial mapping of input and output modalities should only matter if it is relevant to the task users have to fulfill and to the type of action they perform.

Mental Models and Their Applications

The concept of mental models originates from cognitive psychology and its precursors (e.g. Craik, 1943; Johnson-Laird, 1983) as a means to explain our understanding of different complex entities that we experience, such as situations, processes, and relations between objects. In general, mental models can be regarded as preliminary cognitive schemata that are not yet fully learnt but are under construction. The concept of mental models evolved from the idea that we initially do not fully comprehend perceived entities, but have to construct our understanding through experiences. Therefore, understanding can only be a result of a constant update of a mental model based on new information and the model’s prior state.

The general form of a mental model receives information input from two sources. First, when we construct mental models about a new entity, we do not build models from scratch because we implement existing experiences or knowledge from other domains that we deem helpful (top-down processing). When we see a smashed bottle of water next to a table, we assume that some force caused the bottle to move and that gravity made it fall. We could further assume that our cat was the force that initially caused the mess, because she had done so twice already. We thereby systematically draw from previous knowledge (top-down) in order to reconstruct the event via a mental model. Second, mental models are constructed for a specific entity that deviates from similar entities that we referred to in top-down processing. We therefore look for and implement information from the specific event itself (bottom-up processing). The fact that the bottle of water is broken and located on the floor next to a table indicates that a specific event has happened, i.e. the bottle dropped. This information caused top-down processing, which relates the event to other situations, such as when things drop from the kitchen table. However, upon further examination of the scene we realize that our son is standing at the other side of the room, ashamedly looking down. This new information causes a major modification of our model (bottom-up), i.e. the causation of the event is substituted. Because mental models are typically constructed over time according to the information available, other relevant prior knowledge and new information are implemented into the model to improve its effectiveness, with the goal of achieving a good model fit. In our example, we might ask why the son smashed the bottle of water: was it bad luck, because he did not pay attention to the table (bottom-up), or did he again argue with his brother, resulting in the accident (top-down)?
Both bottom-up and top-down processing are at the core of human cognition and are utilized in every situation that involves perception. Accordingly, both types of processing, as well as the construction of mental models as higher cognitive instances, are automated processes that do not require conscious processing. Only through the combination of both types of processing can mental models gain accuracy over time.

Mental models serve as a tool allowing for interaction within the real world without initially having to fully understand every element—they allow us to model the real world. Once we have an initial model of a given entity, we can make assumptions about possible outcomes of interactions with the entity and test the assumption against the real world outcome. The deviation between predicted and observed outcome serves as an indicator as to whether the mental model suffices or how it can be further improved. This way we can simply simulate our environment with ever-increasing complexity to achieve better understanding. However, mental models are processed in our working memory and are thereby subject to our working memory’s processing capacity. Therefore, complexity reaches its limits when the model requires more mental resources than are available. The model is then no longer efficient as a means to simulate our environment. Consequently, simple models that do not rely on detailed parameters allow us to simulate entities despite our cognitive processing limitation. Thus it is important to keep models simple and reduce their complexity to a necessary number of components that can still be handled by our processing capacity.
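
The predict-and-compare cycle described above can be made concrete with a deliberately simple sketch. It is an illustration only and not a cognitive model proposed in the literature cited here: the class `MentalModel`, the single numeric `estimate`, and the `learning_rate` parameter are all assumptions introduced for this example. The model predicts an outcome, observes the real outcome, and uses the deviation to revise itself.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class MentalModel:
    """Toy model holding one belief, e.g. how far a character jumps."""
    estimate: float              # current belief (prior knowledge, top-down)
    learning_rate: float = 0.5   # hypothetical: how strongly errors revise the belief

    def predict(self) -> float:
        return self.estimate

    def update(self, observed: float) -> float:
        """Compare prediction with observation (bottom-up) and revise the belief."""
        deviation = observed - self.predict()
        self.estimate += self.learning_rate * deviation
        return deviation


def learn(observations: Iterable[float], model: MentalModel) -> List[float]:
    """Feed observations to the model and return the absolute deviations."""
    return [abs(model.update(obs)) for obs in observations]


if __name__ == "__main__":
    # The character always jumps 4 units; the player initially expects 0.
    model = MentalModel(estimate=0.0)
    print(learn([4.0, 4.0, 4.0, 4.0], model))  # deviations shrink with experience
    print(model.estimate)
```

A shrinking deviation corresponds to a model that suffices; a persistently large deviation signals that the model has to be revised. The sketch ignores the working memory limits discussed in this section.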

Within the context of media reception research, a similar concept has been used by Kintsch & van Dijk (1978) to explain a reader’s understanding of texts. In this case the amount of available information is limited to the aspects that are mentioned in the text. In order to understand an event, the reader has to rely on prior knowledge to fill the information gaps within the text. Kintsch & van Dijk argued that readers build a proposition network from textual information and preexisting propositions to represent the situation of a text. The concept of mental models was also applied to film studies (Ohler, 1994) in an effort to explain the viewer’s understanding of film narratives, in this case called a situation model. As with written texts, movies often do not provide the recipient with all the information required to understand events. On the contrary, detective stories often suggest pieces of information that recipients implement into their situation model because they deem them reasonable, thereby manipulating the recipients’ understanding of the narrative in order to create suspenseful entertainment experiences (Ohler & Nieding, 1996). Whenever new information becomes available through the detective’s investigation, our model is updated.

Spatial Information in Player-Game Interaction

In our effort to explain effects of stereoscopic representation on UX by a mental interaction model, we first have to define which information is represented within the model and why the interaction between player and game is an important process for a seemingly mere perceptual phenomenon. Interaction is often described as the fundamental component of the gaming experience (Crawford, 2003; Salen & Zimmermann, 2004; Zimmermann, 2004) that elevates games to a new type of media distinct from books or films. In addition to the narrative that is carried out within the game, we can manipulate the virtual environment to a certain degree in order to advance the narrative by our actions. Through this interaction our perspective on the narrative shifts from that of an observer to that of an actor whose actions determine the narrative’s outcome (Aarseth, 1997).

Interacting with game systems requires at least one channel for each input and output of information. To understand the cognitive prerequisites of interaction models we first have to identify the type of information that is carried within each channel. Second, as we want to understand stereoscopic representation—a spatial phenomenon—we have to identify each channel’s relation to spatial information.

In terms of input, games provide different types of game controllers, such as gamepads, mouse, keyboard, or motion sensitive controllers. The buttons of a controller are generally linked to a specific action in the game. However, for some actions the mapping between controller and game action is mediated by an additional input layer within the GUI. In the latter case, the action is not activated by a specific button on the controller as the button only executes an action that is linked to some GUI element. To understand the effects of interaction on UX, we should not utilize a simplified definition of interaction between real world action and in-game consequence. Instead, we should analyze each input layer separately as each input action differs in the way it is related to in-game actions. In terms of output, games use several information channels to convey feedback, i.e. visual, auditory, and haptic information. In this paper we focus on visual feedback and, more precisely, on the effects of stereoscopic versus non-stereoscopic representation on the interaction process and thus on UX.

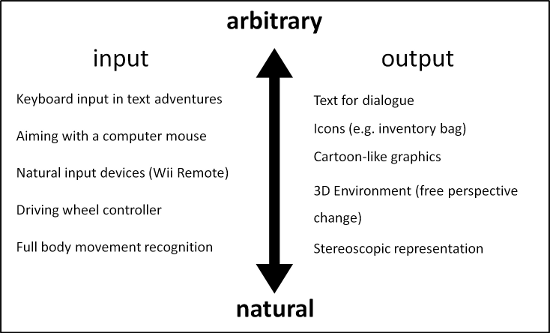

Each information channel that is used in the interaction process can be classified according to its perceived naturalness compared to the real world on a continuum between arbitrary and natural (see Figure 1).

Figure 1. Continuum of perceived naturalness of input and output information channels in games

Arbitrary input information is common in games and often spares players from having to enter a complex series of button combinations in order to perform an action. Instead of having to swing a sword and block an opponent’s strikes by moving the sword via the gamepad’s analog stick, we are given one button for each action. Although analog stick movements would be perceived as more natural because they map the directionality of the sword’s movement, this type of input would greatly increase input complexity and thereby the game’s difficulty. Accordingly, arbitrary input facilitates UX by reducing input complexity, so that players can perform relevant actions without much effort and can focus their attention on more relevant entities (e.g. strategic decisions). Only recently, with modern gaming consoles, has technology enabled players of mass-marketed games to use input devices with greater naturalness that are sensitive to the player’s movement. Although other types of natural input devices were available before (e.g. steering wheels or microphones), they are only applicable to specific types of action, whereas the recent generation of input devices supports a wide range of possible actions.

Nonetheless, input devices such as steering wheels, drum sets or microphones require almost exactly the same movements that are necessary for driving cars, playing drums or singing a song. In this case the motion performed by the player can be transferred directly into the game. However, the more intensely an input device is used for different input actions (e.g. playing tennis or swinging a sword), the more the input information has to be interpreted by the game in order to reach a robust means of interaction.

Because all these movements represent directionality, rotation, acceleration, and speed in a three-dimensional space, this type of input can be regarded as fairly natural, depending on the degree to which a game interprets the input information. As a consequence of the increased naturalness of input, players have to focus their attention on the input action itself to a greater degree because they have to coordinate their movements according to the desired consequence in the game. Arbitrary input devices simplify the interaction process by reducing rather complex actions to a single button press. Natural input devices, however, often force the player to perform an action as it should appear in the game. The learning process of an interface is therefore more demanding when we have to learn motor skills instead of simple button mappings. This sensorimotor experience should result in a very different gameplay experience.

In terms of naturalness of visual output, games usually provide both arbitrary and more natural information. Arbitrary information is used for numerical feedback (e.g. the amount of experience points required to reach the next level) or in the form of symbols that convey relevant game information (e.g. button symbols in quick time events). The degree to which visual output is rendered naturally depends on the respective game: Early game engines were not able to represent game elements realistically. Over the past ten years, however, game engines constantly gained visual fidelity with some games getting close to the visual quality of films. The latter is especially true for games that utilize the first-person perspective, usually allowing free movement and free perspective change. Therefore, visual representation of those games is highly developed in terms of object shape, object movement, texture, and lighting quality, and can be regarded as a highly natural type of representation. Additionally, players gain an impression of the three-dimensional quality of the virtual environment via monocular depth cues, such as object size, perspective, and movement speed while moving around objects. However, they do not fully perceive spatial depth, but utilize monocular depth cues. Only with stereoscopic display technology can players additionally utilize binocular depth cues to perceive actual spatial depth. Games that allow stereoscopic representation can therefore present visual output with a higher visual fidelity than games that merely rely on a three-dimensional virtual game world that is reduced to a two-dimensional representation.

Recent research investigated if this assumed difference in naturalness of output had an effect on UX. The inconclusive results may be a result of at least three circumstances: (1) there is no effect; (2) there are other variables that mediate the effect; or (3) there is no difference in perceived naturalness in the first place. With the help of interaction models, we can explain that prior research might be subject to a combination of (2) and (3): we argue that there is an effect if a given system constructs a meaningful relation between stereoscopic output, natural input and type of task. Therefore, an effect should exist, if a game accounts for a natural type of input and tasks that rely on interaction in a three-dimensional space (2). However, if a game only provides natural input devices and stereoscopic representation, but spatial depth is not relevant to the task a player fulfills, effects of stereoscopic representation should only exist as a short-term sensation due to the new kind of experience. Additionally, it should have no effect in extended gaming sessions, because it is not relevant to the game. In the latter case, other experiences superimpose the impression of spatial depth and stereoscopic representation should be perceived as just as natural as non-stereoscopic representation (3), given that the player is not forced to compare both conditions.

Mental Models of Interaction and Spatial Mapping

For stereoscopic representation to positively affect UX, the additional information this technology provides, i.e. binocular spatial depth cues, has to be relevant to the gaming experience. Only when spatial depth cues are at the core of the game mechanics can they influence UX in extended gaming sessions. Because interactivity is regarded as the central attribute of digital games, spatial depth cues would have to be relevant to the interaction between player and game. This interaction comprises at least two information channels that flow in opposite directions; therefore, not only should display technology present spatial depth cues, but input devices should also allow players to input spatial depth into the system. However, as spatial depth is not relevant to the gaming experience per se, its relevance has to be enforced by the player’s tasks. In this case, processing spatial depth information should considerably determine the player’s success and thereby focus the player’s attention to some extent on spatial depth; it becomes relevant to the way the player interacts with the game.

We argue that whenever players interact with a game for the first time, they construct a mental model of the interaction process because games differ considerably in the way different input actions are linked to specific game events. One might counter that experienced players already possess an elaborated model of how to interact with a game because many games rely on conventional mappings of controller buttons (e.g. analog sticks for movement and perspective control). However, there are still actions that are not subject to conventional controller mapping. Additionally, even if the general functionality of a button is intuitive because it is conventional, the specific outcome as well as the required timing and rhythm of button presses still differ between games. Eventually, players will have to learn basic interaction principles for each game by constructing an interaction model and improving it through game experience. This also becomes evident in the tutorial phase at the beginning of almost every game, where players learn the basics of game control and game mechanics. In the case of natural input devices, the learning process can become rather difficult, as input actions gain complexity because they require motor actions.

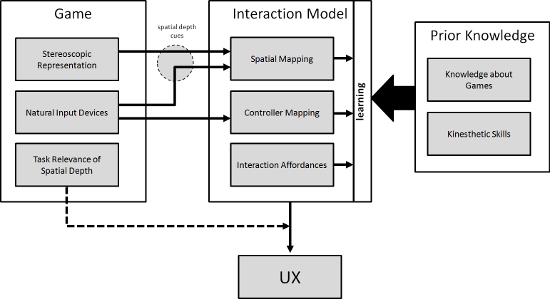

Just like other mental models, interaction models are constructed in a combined bottom-up and top-down process, i.e. the model draws from both prior knowledge and experience during gameplay. Experienced players should benefit from interaction models of other games and should therefore learn more quickly during the actual interaction with the game. In addition to controller mappings, interaction models represent at least two other types of information. First, they model the interaction affordances of a game, which can be regarded as a set of possible actions that allow the player to manipulate the game world (e.g. moving boxes or turning on a radio). Accordingly, players do not know initially which interaction affordances are present within the game, but have to identify them throughout the game.

Second, because both input and output modalities convey spatial information, the player faces the problem of multiple spaces, with the gaming environment and the living room being separated from each other. The player has to understand how the space they are physically located in is related to the space they perceive visually. For example, in Wii Sports/Tennis (Nintendo, 2006), both spaces are fairly independent of one another: A forehand swing does not require the player to swing the racket from back to front in an upward movement; the player could also just perform a short movement in any direction. The two spaces are not linked to one another; the only information that is gathered from the controller is the amount of acceleration and the timing of a swing. In this scenario spatial depth cues would not be relevant to the interaction and, therefore, would not affect the UX. Spatial depth only becomes important when both spaces are closely linked, a state that we refer to as spatial mapping. In this case, the game environment becomes part of the player’s living room and vice versa, i.e. the player is perceptually located within the virtual environment they interact with, which might be referred to as an intense feeling of spatial presence (e.g. Tamborini & Skalski, 2006). Here, the player’s interaction model would suggest that their movements in the real world space are consistent with movements in the virtual world. The extreme state of spatial mapping is presented by the Holodeck technology in Star Trek: The Next Generation (Berman, 1987-1994). In this fictional VR, each element of the virtual environment can be interacted with directly. But even real VR installations can achieve a similar perceptual phenomenon, where each set of coordinates of the virtual world is mapped to a set of coordinates in the real world. Players can then use natural input to manipulate the object at its actual position.
A tennis game could therefore project the ball’s position into the space in which the player is located and track their precise movement to determine whether they hit the ball or not. Of course, this high level of spatial mapping would require the game to track the player’s head and controller position to display the player’s perspective correctly.
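
The difference between the two tennis scenarios can be sketched in a few lines. The following is a minimal illustration under stated assumptions, not a description of how any existing game is implemented: the function names, the `reach`, `min_acceleration` and `timing_window` thresholds, and the simple offset-and-scale mapping between real and virtual coordinates are all hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Vec3:
    x: float
    y: float
    z: float


def real_to_virtual(p: Vec3, origin: Vec3, scale: float = 1.0) -> Vec3:
    """Map a tracked real-world position onto virtual-world coordinates."""
    return Vec3((p.x - origin.x) * scale,
                (p.y - origin.y) * scale,
                (p.z - origin.z) * scale)


def distance(a: Vec3, b: Vec3) -> float:
    return math.dist((a.x, a.y, a.z), (b.x, b.y, b.z))


def hit_without_spatial_mapping(acceleration: float, swing_time: float,
                                ball_arrival_time: float,
                                min_acceleration: float = 2.0,
                                timing_window: float = 0.2) -> bool:
    """Only strength and timing of the swing count; position is ignored."""
    return (acceleration >= min_acceleration
            and abs(swing_time - ball_arrival_time) <= timing_window)


def hit_with_spatial_mapping(racket_real: Vec3, ball_virtual: Vec3,
                             origin: Vec3, reach: float = 0.15,
                             scale: float = 1.0) -> bool:
    """The racket must actually be where the ball is, including in depth."""
    racket_virtual = real_to_virtual(racket_real, origin, scale)
    return distance(racket_virtual, ball_virtual) <= reach


if __name__ == "__main__":
    origin = Vec3(0.0, 0.0, 0.0)
    ball = Vec3(0.5, 1.0, 0.3)
    # Same height and side position, but the racket is too far back in depth.
    print(hit_with_spatial_mapping(Vec3(0.5, 1.0, 0.9), ball, origin))
    # A strong, well-timed swing counts as a hit wherever the racket is.
    print(hit_without_spatial_mapping(3.0, 1.0, 1.1))
```

In the first variant, depth information is irrelevant to success; in the second, a misjudgment of depth alone turns a hit into a miss, which is the situation in which binocular depth cues should matter to the player.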

Games that provide a high degree of spatial mapping stress the player’s processing of spatial depth cues, as these cues are relevant to the player’s success, and could therefore affect UX quite intensely (see Fig. 2).

Figure 2. Construction of the interaction models and their relation to UX

Arguably, only spatial mapping allows natural input devices to reach their full potential: possible input actions can gain a high degree of complexity due to the high spatial resolution the devices track and the spatial validity of the movements in the virtual space as a consequence of spatial mapping. Consequently, the training required to build the interaction model for this type of input would increase considerably. However, over time players develop automated motor programs, much as they learn gear shifting in driving school. These motor programs can trigger complex motor actions that have been trained repeatedly. Once these motor programs have reached a sufficient precision, the player’s UX can benefit greatly from the increased complexity of input actions. Because the player’s real actions are responsible for a positive outcome of the game, the player experiences a higher degree of perceived self-efficacy (Klimmt & Hartmann, 2006) compared to other games with arbitrary input mappings. Thereby, stereoscopic representation can further improve UX by raising the effectiveness of natural input devices.

Discussion

The rise of new technologies in computer games has always been a mixed blessing, since it takes considerable time until a new technology is mastered. Consumers have often been used as test audiences, paying for half-baked products. When 3D graphics first arrived, many games used them simply because they were available. They were not meaningfully integrated into the gameplay, however, and so players did not gain any additional value. A similar observation can be made concerning stereoscopic technology in movies and games for the reasons mentioned above. We argue that with a theoretical understanding and systematic implementation, stereoscopic representation can not only enrich UX, but also deliver new types of entertainment software for the consumer market. To achieve this, the additional information conveyed through stereoscopy (i.e. binocular spatial depth cues) has to be relevant to the tasks users perform within the game. Unless spatial information is built into the game mechanics, stereoscopy will remain a gimmick without real consequences for UX. Additionally, the mapping of input and output spaces results in a higher degree of perceived self-efficacy for the player and can thus enrich the UX. In the end, however, we do not want to prescribe what is and is not fun for players: spatial mapping allows for other forms of UX, which not every player will necessarily perceive as more fun. One reason we play games is to escape our everyday life and to have adventures we cannot have in real life. A high degree of spatial mapping (e.g. in an action game) can be more work than relaxation for the player and may not be in the interest of particular game design concepts. Game designers should therefore use it wisely to make a good game.

To support our argument about spatial mapping empirically, we first need adequate software products. As stated above, current games do not fulfill this requirement. To create such games, designers have to consider the constraints of stereoscopic technology as well as user acceptance. However, it is likely that technological parameters will have to be adjusted to the given game mechanics or game tasks. An iterative design approach with exhaustive testing is advisable, since the balance between content, interaction possibilities and visualization of the gameplay is expected to be delicate. Since complex inputs might overstrain the user, games should, at least at the beginning, require rather simple interactions in order to successfully enhance the gaming experience through stereoscopic representation. For example, the player’s task could be the manipulation of one moving object at a time. This kind of gameplay would not only help players to gradually get used to the technology, but would also facilitate behavioral measurement and the setup of experiments in which all relevant parameters of the software can be manipulated easily.

Even given the availability of games suitable for research, it is still not simple to measure the crucial variables because they have yet to be identified. General constructs like UX, presence and immersion have proven to be too unfocused or overlapping, and may therefore be considered as covariates, if anything. Even though these constructs can be related to the experience during gameplay, we think the outcome of interest has to be specified further. We argue that players benefit from games that implement stereoscopic technology if these games provide a higher degree of entertainment and amusement than games with comparable gameplay in terms of game tasks and interactions. However, there is not yet a standardized method for measuring the entertainment value of a game. We suggest the proven combination of three methods: self-report, observation and psychophysiological measurement. Furthermore, other relevant variables and approaches that can influence the UX during gameplay have to be considered. Previous studies did not take into account that players may gradually get used to stereoscopic technology through repeated exposure. As a consequence, simulator sickness or other negative effects could disappear or at least be reduced substantially. Players could then become aware of the benefits of a new technology that may be distracting or bothersome upon first interaction or short-term usage.

– All images belong to their rightful owner. Academic intentions only.-

References

Aarseth, E. (1997). Cybertext. Perspectives on ergodic literature. Baltimore, MD: Johns Hopkins University Press.

Agarwal, R., & Karahanna, E. (2000). Time flies when you’re having fun: Cognitive absorption and beliefs about information technology usage. MIS Quarterly, 24(4), pp. 665–694. doi:10.2307/3250951

Bae, S., Lee, H., Park, H., Cho, H., Park, J., & Kim, J. (2012). The effects of egocentric and allocentric representations on presence and perceived realism: Tested in stereoscopic 3D games. Interacting with Computers, 24(4), pp. 251–264. doi:10.1016/j.intcom.2012.04.009

Baren, J. V., & IJsselsteijn, W. (2004). Measuring presence: A guide to current measurement approaches. Retrieved from http://ispr.info/about-presence-2/tools-to-measure-presence/ispr-measures-compendium/

Berman, R. (Producer). (1987-1994). Star Trek: The Next Generation [DVD].

Bernhaupt, R. (Ed.). (2010). Evaluating user experience in games. London, UK: Springer.

Bernhaupt, R., Eckschlager, M., & Tscheligi, M. (2007). Methods for evaluating games. In M. Inakage, N. Lee, M. Tscheligi, R. Bernhaupt, & S. Natkin (Eds.) Proceedings of the international conference on advances in computer entertainment technology – ACE ‘07 (pp. 309-310). New York, NY: ACM Press. doi:10.1145/1255047.1255142

Biocca, F. (1997). The cyborg’s dilemma: Progressive embodiment in virtual environments. Journal of Computer Mediated Communication, 3(2). Retrieved from http://jcmc.indiana.edu/vol3/issue2/biocca2.html

Craik, K. (1943). The nature of explanation. Cambridge University Press.

Crawford, C. (2003). On game design. San Francisco: New Riders.

Csikszentmihalyi, M. (1975). Beyond boredom and anxiety: Experiencing flow in work and play. San Francisco: Jossey-Bass.

Douglas, Y., & Hargadon, A. (2000). The pleasure principle: Immersion, engagement, flow. In F. M. Shipman, P. J. Nürnberg, & D. L. Hicks (Eds.), Proceedings of the eleventh ACM on Hypertext and hypermedia (pp. 153–160). San Antonio, TX: ACM. doi:10.1145/336296.336354

Elson, M., van Looy, J., Vermeulen, L., & Van den Bosch, F. (2012, September). In the mind’s eye: No evidence for an effect of stereoscopic 3D on user experience of digital games. Paper presented at the ECREA ECC 2012 preconference Experiencing Digital Games: Use, Effects & Culture of Gaming, Istanbul, Turkey.

Frank, L. H., Kennedy, R. S., Kellogg, R. S., & McCauley, M. E. (1983). Simulator sickness: A reaction to a transformed perceptual world: I. Scope of the problem. Proceedings of the Second Symposium of Aviation Psychology, Ohio State University, Columbus, OH, April 25-28.

Häkkinen, J., Pölönen, M., Takatalo, J., & Nyman, G. (2006, September). Simulator sickness in virtual display gaming: A comparison of stereoscopic and non-stereoscopic situations. Paper presented at the 8th International Conference on Human Computer Interaction with Mobile Devices and Services, Helsinki, Finland.

Häkkinen, J., Takatalo, J., Kilpeläinen, M., Salmimaa, M., & Nyman, G. (2009). Determining limits to avoid double vision in an autostereoscopic display: Disparity and image element width. Journal of the Society for Information Display, 17(5), pp. 433-441. doi:10.1889/JSID17.5.433

Hiruma, N., Hashimoto, K., & Takeda, T. (1996). Evaluation of stereoscopic display task environment by measurement of accommodation responses. Engineering in Medicine and Biology Society, 1996. Bridging Disciplines for Biomedicine. Proceedings of the 18th Annual International Conference of the IEEE, (pp. 672–673).

Howarth, P. A. (2011). Potential hazards of viewing 3-D stereoscopic television, cinema and computer games: A review. Ophthalmic & Physiological Optics: The Journal of the British College of Ophthalmic Opticians (Optometrists), 31(2), pp. 111–122. doi:10.1111/j.1475-1313.2011.00822.x

ISO FDIS 9241-210:2010. (2010). Ergonomics of human-system interaction – Part 210: Human-centered design for interactive systems. Retrieved from http://www.iso.org/iso/iso_catalogue/catalogue_tc/catalogue_detail.htm?csnumber=52075

Johnson-Laird, P. N. (1983). Mental models: Towards a cognitive science of language, inference, and consciousness. Cambridge, MA: Harvard University Press.

Johnson-Laird, P. N. (2004). The history of mental models. In K. Manktelow & M.C. Chung (Eds.), Psychology of reasoning: Theoretical and historical perspectives (pp. 179-212). New York, NY: Psychology Press.

Juul, J. (2001). Games telling stories? Game Studies. The International Journal of Computer Game Research, 1(1). Retrieved from http://www.gamestudies.org/0101/juul-gts/

Kennedy, R. S., Lane, N. E., Berbaum, K. S., & Lilienthal, M.G. (1993). Simulator Sickness Questionnaire: An enhanced method for quantifying simulator sickness. The International Journal of Aviation Psychology, 3(3), pp. 203–220. doi:10.1207/s15327108ijap0303_3

Kintsch, W., & van Dijk, T. A. (1978). Toward a model of text comprehension and production. Psychological Review, 85(5), pp. 363-394. doi:10.1037/0033-295X.85.5.363

Klimmt, C., & Hartmann, T. (2006). Effectance, self-efficacy, and the motivation to play video games. In P. Vorderer, & J. Bryant (Eds.), Playing video games: Motives, responses, and consequences (pp. 132-145). Mahwah, NJ: Lawrence Erlbaum.

Komulainen, J., Takatalo, J., Lehtonen, M., & Nyman, G. (2008). Psychologically structured approach to user experience in games. In K. Tollmar, & B. Jönsson (Eds.), Proceedings of the 5th Nordic conference on Human-Computer Interaction building bridges – NordiCHI ‘08 (pp. 487-490). New York, NY: ACM Press. doi:10.1145/1463160.1463226

Krahn, B. (2012). User Experience: Konstruktdefinition und Entwicklung eines Erhebungsinstruments [User Experience: Construct definition and measure development]. Bonn, Germany: GUX.

Lambooij, M., IJsselsteijn, W., Fortuin, M., & Heynderickx, I. (2009). Visual discomfort and visual fatigue of stereoscopic displays: A review. Journal of Imaging Science and Technology, 53(3). doi:10.2352/J.ImagingSci.Technol.2009.53.3.030201

Lombard, M., & Ditton, T. (1997). At the heart of it all: The concept of presence. Journal of Computer Mediated Communication, 3(2). Retrieved from http://jcmc.indiana.edu/vol3/issue2/lombard.html. doi:10.1111/j.1083-6101.1997.tb00072.x

MacKenzie, K. J., & Watt, S. J. (2010). Eliminating accommodation-convergence conflicts in stereoscopic displays: Can multiple-focal-plane displays elicit continuous and consistent vergence and accommodation responses? SPIE Proceedings Vol. 7524. Stereoscopic Displays and Applications. doi:10.1117/12.840283

McMahan, A. (2003). Immersion, engagement, and presence. A method for analyzing 3-D video games. In M.J. Wolf, & B. Perron. (Eds.), The video game theory reader (pp. 67–87). New York, NY: Routledge.

McMahan, R. P., Gorton, D., Gresock, J., McConnell, W., & Bowman, D. A. (2006). Separating the effects of level of immersion and 3D interaction techniques. In M. Slater, Y. Kitamura, A. Tal, A. Amditis, & Y. Chrysanthou (Eds.), Proceedings of the ACM Symposium on Virtual Reality Software and Technology (pp. 108-111). New York, NY: ACM. doi:10.1145/1180495.1180518

Murray, J. (1997). Hamlet on the holodeck: The future of narrative in cyberspace. Cambridge, MA: MIT Press.

Ohler, P. (1994). Kognitive Filmpsychologie. Verarbeitung und mentale Repräsentation [Cognitive psychology of movies. Processing and mental representation of narrative movies]. Münster, Germany: MAkS-Publikationen.

Ohler, P., & Nieding, G. (1996). Cognitive modeling of suspense-inducing structures in narrative films. In P. Vorderer, H. J. Wulff & M. Friedrichsen (Eds.), Suspense conceptualizations, theoretical analyses and empirical explorations (pp. 129-147). Hillsdale, NJ: Lawrence Erlbaum.

Pietschmann, D. (2009). Das Erleben virtueller Welten. Involvierung, Immersion und Engagement in Computerspielen [Experiencing virtual worlds. Involvement, Immersion and Engagement in computer games]. Boizenburg, Germany: VWH

Rajae-Joordens, R. J. (2008). Measuring experiences in gaming and TV applications. In J.H. Westerink, M. Ouwerkerk, T.J. Overbeek, W.F. Pasveer, & B. Ruyter (Eds.), Probing experience. From assessment of user emotions and behaviour to development of products. (Vol. 8, pp. 77-90). Dordrecht, Netherlands: Springer.

Rajae-Joordens, R. J., Langendijk, E., Wilinski, P., & Heynderickx, I. (2005, December). Added value of a multi-view auto-stereoscopic 3D display in gaming applications. Paper presented at the 12th International Display Workshops in conjunction with Asia Display. Takamatsu, Japan.

Ravaja, N., Saari, T., Turpeinen, M., Laarni, J., Salminen, M., & Kivikangas, M. (2006). Spatial presence and emotions during video game playing: Does it matter with whom you play? Presence: Teleoperators and Virtual Environments, 15(4), pp. 381–392. doi:10.1162/pres.15.4.381

Rollings, A., & Adams, E. (2003). Andrew Rollings and Ernest Adams on game design. San Francisco, CA: New Riders.

Sachs-Hombach, K. (2005). Die Bildwissenschaft zwischen Linguistik und Psychologie [Image studies between linguistics and psychology]. In S. Majetschak (Ed.), Bild-Zeichen [Image signs] (pp. 157-178). Munich, Germany: Wilhelm Fink.

Salen, K., & Zimmerman, E. (2004). Rules of play. Game design fundamentals. Cambridge, MA: MIT Press.

Skalski, P., Tamborini, R., Shelton, A., Buncher, M., & Lindmark, P. (2010). Mapping the road to fun: Natural video game controllers, presence, and game enjoyment. New Media & Society, 13(2), pp. 224–242. doi:10.1177/1461444810370949

Smith, E.R., & Queller, S. (2001). Mental Representations. In Tesser, A. & Schwarz, N. (Eds.). Blackwell handbook of social psychology: Intraindividual processes (pp. 391–445). London, UK: Blackwell Publishers.

Sobieraj, S., Krämer, N.C., Engler, M., & Siebert, M. (2011, October). The influence of 3D screenings on presence and perceived entertainment. Paper presented at the International Society for Presence Research Annual Conference, Edinburgh, Scotland.

Sweetser, P., & Wyeth, P. (2005). GameFlow: A model for evaluating player enjoyment in games. ACM Computers in Entertainment, 3(3), pp. 1–24. doi:10.1145/1077246.1077253

Takatalo, J., Kawai, T., Kaistinen, J., Nyman, G., & Häkkinen, J. (2011). User experience in 3D stereoscopic games. Media Psychology, 14(4), pp. 387–414. doi:10.1080/15213269.2011.620538

Tam, W. J., Speranza, F., Yano, S., Shimono, K., & Ono, H. (2011). Stereoscopic 3D-TV: Visual comfort. IEEE Transactions on Broadcasting, 57(2), pp. 335–346. doi:10.1109/TBC.2011.2125070

Tamborini, R., & Skalski, P. (2006). The role of presence in the experience of electronic games. In P. Vorderer, & J. Bryant (Eds.), Playing video games: Motives, responses, and consequences (pp. 225–240). Mahwah, NJ: Lawrence Erlbaum Associates Publishers.

Witmer, B., & Singer, M. (1998). Measuring presence in virtual environments: A presence questionnaire. Presence: Teleoperators & Virtual Environments, 7(3), pp. 225–240. doi:10.1162/105474698565686

Zimmerman, E. (2004). Narrative, interactivity, play and games: Four naughty concepts in need of discipline. In N. Wardrip-Fruin, & P. Harrigan (Eds.), First Person: New media as a story, performance, and game (pp. 154-164). Cambridge, MA: MIT Press.

Ludography

3D Pong, GriN, Germany, 2011

Need for Speed Underground, Electronic Arts Black Box, Canada, 2003.

Quake III: Arena, id Software, USA, 1999.

Sly 2: Band of Thieves, Sucker Punch Productions, USA, 2010.

Uncharted 3: Drake’s Deception, Naughty Dog, USA, 2011.

Wii Sports, Nintendo, Japan, 2006.

- For a detailed historical overview of the concept of mental models, see Johnson-Laird (2004).

- The term narrative should be understood in its broadest sense here. We regard player action to be part of the narrative, while other authors limit the term narrative to the plot of the game. For a discussion see Juul (2001).

- As an example, a button could be used to let the player interact with the environment. In this case, it is not the button that determines whether the player opens a door or speaks to an NPC, but the player’s selection of a door or an NPC by moving towards it. The interact button then only executes an interaction affordance of a GUI element.

- This continuum has already been reported by Sachs-Hombach (e.g. 2005) to categorize media, especially visual media, according to their perceptual fidelity.

- It should be mentioned that in some cases the player exerts herself more than necessary because she overestimates the accuracy of controller movement required to perform an action in the game. In Wii Sports Tennis, for example, a simple controller movement is just as successful as a fully executed serve.

This work is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License.