Benjamin Vercellone (Missouri Department of Social Services), John Shelestak (Department of Biological Sciences, Kent State University), Yaser Dhaher (Department of Mathematics, Kent State University) and Robert Clements (Department of Biological Sciences, Kent State University)

Abstract

Vision enables a person to simultaneously perceive all parts of an object in its totality, relationships to other objects for scene navigation, identification and planning. These types of perceptual processes are especially important for comprehending scenes in gaming environments and virtual representations. While individuals with visual impairments may have reduced abilities to visualize objects using conventional sight, an increasing body of evidence indicates that compensatory neural mechanisms exist whereby tactile stimulation and additional senses can activate visual pathways to generate accurate representations. The current thrust to develop and deploy virtual and augmented reality systems capable of transporting users to different worlds has been associated with a push to include additional senses for even greater immersion. Clearly, the inclusion of these additional modalities will provide a method for individuals with visual impairments to enjoy and interact with the virtual worlds and content in general. Importantly, adoption and development of these methods will have a dramatic impact on inclusion in gaming and learning environments, and especially in relation to science, technology, engineering, and mathematical (STEM) education. While the development, standardization and implementation of these systems is still in the very early stages, gaming devices and open source tools are continuing to emerge that will accelerate the adoption and integration of the novel modes of computer interaction and provide new ways for individuals (with visual impairments) to experience digital content. Here we present the rationale and benefits for using this type of multimodal interaction for individuals with visual impairments as well as the current state of the art in haptic interfaces for gaming, education and the relationships to enhanced end user immersion and learning outcomes. 
As these technologies and devices are adopted and evolve, they are poised to have a dramatic impact on entertainment, education and quality of life of individuals with visual impairments.

Keywords: Haptic media, Special education, Special needs, Video games, Visual impairments.

Introduction

Successfully navigating and interacting with video game environments is naturally tied to the ability of the player to visually discern objects on the display and understand the spatial relationships between them. As such, the realm of gaming has been heavily focused on increasing rendering quality to provide greater acuity and immersion for the user. This has undoubtedly pushed both hardware and software forward; however, little of this development effort has been focused on how to convey in-game elements without the use of vision, specifically for gamers who are visually impaired. Touch-based methods have classically been used by the blind as a substitute for vision, with the most obvious example being the braille method of tactile writing, whereby a system of raised dots organized into six-dot cells, typically on embossed paper, is used to represent characters and punctuation. Invented by Louis Braille (Duxbury Systems, 2017) in the early 19th century, this method has stood the test of time, affirming the utility of touch, or haptic, sensations as an alternate method to convey visual concepts to the blind. In the realm of computer interaction for gaming and visualization of complex imagery, haptics have been integrated for many years via “rumble pads” and force-feedback devices that give users the ability to crudely physically sense digital events (Orozco, 2012). While these systems offer a level of multi-modal interaction for the sighted user, they have had limited use for individuals with visual impairments, with most games for the visually impaired based around the use of sound for cue identification. However, tactile games for individuals with visual impairments based on popular Wii console games (VI-bowling and VI-tennis) have been successfully developed using vibration to indicate ball timing, controller position and object placement (Tony, 2010).
The crudeness of these cues and the difficulty in isolating them spatially limit the vibration-only approach. Nevertheless, this work affirms the principle that tactile feedback can accurately represent spatial concepts within the gaming realm. This would be especially true for haptic interfaces referenced to real-world space with rapid refresh rates, which could provide much of the information that a sighted gamer would get visually.

While individuals with visual impairments may have reduced abilities to visualize objects using conventional sight, an increasing body of evidence indicates that compensatory mechanisms exist whereby tactile stimulation and additional senses can activate the visual system to generate accurate spatial representations. Indeed, reading braille is known to activate the visual cortex of the brain in the blind (Burton, 2006), a region that typically codes visual information, which reflects the recoding of tactile sensations into “visual” signals. Studies also indicate that blind participants are typically better able than sighted persons to discriminate 3D shapes via tactile and haptic interaction (Norman, 2011). Research evidence indicates that the blind have different patterns of activation in the brain during haptic tasks (Roder, 2007) as well as during vibrotactile stimulation (Burton, 2004). Moreover, large-scale structural and functional differences in brain connectivity indicate that the changes are not limited to the visual areas of the brain but extend to associated areas and to “visual” perception as a whole. Perceptual learning and the substitution of sensory signals (where touch is substituted for visual input) can afford a form of “sight” to subjects who are physically incapable of seeing. The remarkable capacity of the human brain to reorganize itself results in long-lasting changes. This type of reorganization of visual pathways affirms the utility of a haptic approach to representing virtual environments to individuals with visual impairments. Such an approach would not only provide a means for additional brain reorganization; the inherent differences in brain structure also suggest that perceptual learning will be more beneficial in this population, with a dramatic impact on in-game orientation and perception.

The advent of new devices that facilitate the generation of complex spatial and temporal representations of real-world and virtual scenes, together with the incorporation of tactile devices that allow physical interaction with computer-generated imagery, has produced a novel platform for augmenting interfaces for the visually impaired. Coupling this with evidence that individuals with visual impairments are able to acquire spatial knowledge through ancillary neural pathways by harnessing additional senses indicates that this is a promising and potentially game-changing avenue to enhance user engagement and immersion. Here we detail the current state of haptic technologies as it relates to encoding spatial information for individuals with visual impairments in both gaming and education. We begin with a discussion of the available hardware, then propose how these technologies could be used to convey in-game information and support learning, and identify barriers and potential solutions. Finally, we address future developments and next steps for dealing with these innovations in the long term.

New Technologies at Stake

Haptic Media

Simple haptic feedback has been a part of gaming for many years, but newer technologies are allowing for a larger range of haptic sensations across different digital experiences. Newer controllers, such as the Steam controller, have improved on this feedback, allowing for a greater range of in-game information through finer control of the haptic output, including haptic information on speed, boundaries, textures, and in-game actions (Steam Store, 2015). Microsoft is developing various haptic controllers that render sensations such as texture, shear, variable stiffness, touch and grasp to enhance the level and variety of stimulation provided by a VR experience (Strasnick, 2018; Whitmire, 2018). Haptic styluses offer a different handheld experience via force feedback instead of vibrotactile feedback and can be used to interact with objects, or as a physical interface for more advanced simulations (Steinberg, 2007). Further, advances in wearable haptics (gloves, vests and full-body suits) have also expanded the potential experience of video games. Gloves allow for different actuators to be woven into the fabric or attached as an exoskeleton to achieve both vibrotactile and force feedback (Virtual Motion Labs, 2018; VRGluv, 2017). The force feedback from the exoskeleton can define the edges of hard surfaces while vibrotactile actuators give information about surface texture. In addition to exoskeleton-based force feedback, some gloves feature pneumatic actuators that can provide pressure directly to the skin, creating a realistic feeling of skin displacement.

Beyond gloves, the same technology is being applied to wearable vests and full body suits to create the most complete and immersive feel possible. Vests allow for targeted sensation to the body depending on where the stimulation is received in a game or VR environment (Kor-Fx, 2014; Hardlight VR, 2017; Woojer, 2018). Electrical nerve and muscle stimulation is also used for haptic feedback and also provides temperature control for changing virtual environments (Teslasuit, 2018). One practical problem with VR gaming is that moving around a space while wearing a headset can be difficult and dangerous. Haptic or omni-directional treadmills help fix this problem by anchoring the user to an area while still allowing for unfettered movement in any direction. More commonplace treadmills are also integrating haptic force feedback to give the sensation of moving through a real place while running on a treadmill (Nordicktrack, 2018). Indeed, a great deal of focus has also been spent on developing haptic touchscreens and incorporating this hardware into experiences for blind users. Newer haptic feedback seeks to alter the interaction between screens and fingers to create a more diverse sense of touch such as friction or texture simulation (Tanvas, 2018). Other developments include the manipulation of ultrasound to achieve tactile stimulation (Hap2U, 2018) with potential to create 3D surfaces in midair. Clearly as these new haptic technologies evolve and standardize the impact on immersive gaming and blind user interaction will be broad.

Visually-Impaired Specific Devices

A number of tactile hardware implementations specifically designed for individuals with visual impairments also exist. The Graphiti is a tactile device consisting of an array of equidistant pins of variable height; it can be connected to a smartphone, which controls the height of the pins to represent text or camera images (Graphiti, 2018). The variable pin height could conceivably be used to represent limited information regarding the Z-axis, color, or any other property of interest, such as an in-game map. The system also offers a way to zoom in and out, which is important from a tactile perspective since human fingers cannot perceive and interpret information as minute and dense as the human eye can. The BrainPort V100 (Wicab, 2018) provides raw real-world geometric information based on wearable camera input via a dongle inserted in the mouth that delivers electro-tactile stimulation to the tongue. Bubble-like patterns convey spatial information on the tongue, and the user learns about shape, direction, relative distance, and size. BLITAB has created a tactile braille tablet that utilizes “tixels” that dynamically rise above the surface of the display and can convert text to braille (Blitlab, 2018). The hardware also provides audio cues for the user but is limited in its ability to convey graphics and objects in 3D space. BlindPAD offers a similar technology consisting of an array of electromagnetic “taxels” with 8 mm pitch and a rapid refresh rate (Blindpad, 2018). The interactive technology is able to display graphics, maps and symbols as a tactile representation. This system has been used successfully in education and can enhance the sense of space of users with visual impairments and their knowledge of unknown places. These latter points would be especially helpful in gaming to enhance blind users’ ability to navigate and investigate virtual environments and worlds.
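
As an illustration of how an in-game map might be rendered on a pin array of this kind, the following is a minimal sketch rather than any vendor's interface. The function names, the grid sizes and the number of pin levels are all assumptions made for the example; a real device would impose its own resolution and API. Each pin takes the maximum value of the map cells it covers so that thin features such as walls survive downsampling, and a simple window function stands in for zooming.

```python
def to_pin_heights(height_map, rows, cols, levels=4):
    """Downsample a 2D grid of values in [0.0, 1.0] to a rows x cols pin array.

    Hypothetical helper: each pin takes the maximum of the source cells it
    covers and is quantized to an integer pin level in 0..levels-1.
    """
    src_rows, src_cols = len(height_map), len(height_map[0])
    pins = []
    for r in range(rows):
        r0 = r * src_rows // rows
        r1 = max(r0 + 1, (r + 1) * src_rows // rows)
        row = []
        for c in range(cols):
            c0 = c * src_cols // cols
            c1 = max(c0 + 1, (c + 1) * src_cols // cols)
            peak = max(height_map[i][j]
                       for i in range(r0, r1)
                       for j in range(c0, c1))
            # Clamp so that a value of exactly 1.0 maps to the top level.
            row.append(min(levels - 1, int(peak * levels)))
        pins.append(row)
    return pins


def zoom(height_map, top, left, size):
    """Return the size x size window of the map starting at (top, left)."""
    return [row[left:left + size] for row in height_map[top:top + size]]


# Assumed example map: 1.0 marks a wall, 0.5 a low obstacle, 0.0 open floor.
game_map = [
    [0.0, 0.0, 1.0, 1.0],
    [0.0, 0.0, 1.0, 1.0],
    [0.0, 0.5, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
]
overview = to_pin_heights(game_map, 2, 2)
detail = to_pin_heights(zoom(game_map, 0, 2, 2), 2, 2)
```

Taking the maximum rather than the mean is a design choice for this sketch: averaging would blur a one-cell-wide wall into a barely raised pin, which is likely harder to detect by touch.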

While these systems would clearly be beneficial for reading braille and other 2D applications, it would appear that the spatial haptics currently in development for VR applications would be capable of creating 3D touch sensations closer to real-world experiences. The greater variety of tactile stimulation available only deepens the experiences and the value these technologies can provide. It is clear that the development of devices specifically for individuals who are blind has been based around familiar technologies typically used to convey text: raised bumps on a flat surface. Nevertheless, creation of a common interface between these devices and video game environments or primitives (potentially by direct access to the graphics depth buffer) would create a new platform for both education and entertainment that would empower its users.

Augmenting Visually-Impaired Gaming

This explosive development in hardware opens new avenues to convey spatial and game-specific information in real time to gamers who have visual impairments. We have identified areas in which these technologies could be exploited to support in-game navigation, information extraction and user interaction. While some methods are specific to a certain device, the same principles would work at some scale using any haptic feedback, from a rumble pack in a controller to a fully fledged VR vest.

- Navigation: Fundamental to almost all gaming experiences is the requirement to correctly navigate and understand virtual environments. Tactile maps, proximity cues and new in game tools could enhance blind gameplay.

- Tactile maps. Tactile maps used by the blind have been shown to augment navigation when used during and prior to route traversal. However, the specific touch cues must be carefully considered when representing different types of information (roads, water, grass). A tactile approach to navigating gaming environments would likely offer benefits similar to those seen in the real-world case. It has been shown that the haptic approach, when coupled with additional modalities such as audio, can provide a method for individuals with visual impairments to generate cognitive maps of virtual environments using multimodal cues (Lahav, 2012); building such maps is one of the major challenges in navigating gaming environments. Gaming environments (as a top-down map) represented on a connected cell phone or other tactile tablet device (listed above) and explored via touch (vibration, tactile pixels) would provide an inexpensive solution. Here, haptic cues would orient the user using published frameworks for providing haptic access to 2D maps (Kostopoulos, 2007) to enhance knowledge and traversal of gaming environments.

- Proximity cues. As well as knowledge of entire gaming maps, information about discrete and proximal in-game cues (walls, surfaces, barriers) is essential to spatial awareness, navigation and gameplay. Haptic outputs (within a vest, for example) at discrete regions over the body, activated by cues extracted from the in-game display, would rapidly provide feedback about in-game surfaces. Using this strategy, a wall detected on the left side of the in-game character would activate a haptic actuator on the left side of the player's body. Modulation of the haptic output (such as vibration frequency and amplitude) would code for object distance and texture, as has been shown to be successful in physical applications using proximity sensors (Keys, 2015).

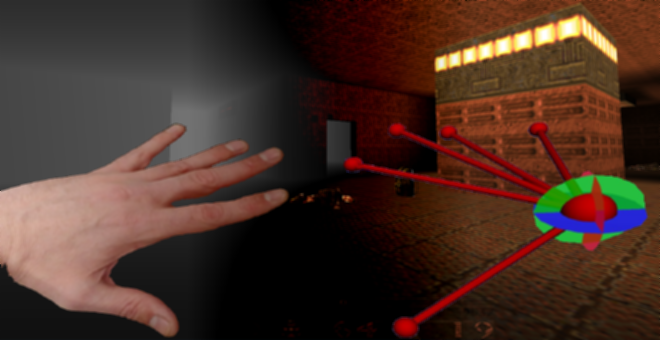

- New tools. Here we propose the development of new methods for users to probe spatial environments, specifically methods that let users digitally explore regions of the virtual environment and receive targeted haptic feedback. With tools such as a haptic sonar, a floor probe and a virtual compass, users could target specific areas of the screen and be given feedback regarding structural elements and orientation. The proposed haptic sonar would probe a customizable portion of the environment and activate an output (a rumble pack, for example) if an object is within the frustum of the probe. Floor probes would more specifically sample the floor directly in front of the user within the game and indicate hazards (water, textures, holes) by varying haptic outputs to facilitate locomotion. Orientation is key to navigating any environment, and a haptic compass could identify gaze direction in the form of a necklace (or an array of actuators around the body) in which feedback rotates around the ring to indicate north. These three strategies have been shown to help blind navigation in real-life applications (Quest, 2018; Choiniere, 2017; Visell, 2009), suggesting they may be beneficial for navigating virtual environments.

- In-Game Information: Layered upon game scene spatial information are characters, entities, game packs, enemies and other relevant dynamic game information. In order to provide a separate stream of information a distinct and generalized in game warning and information system is proposed. The information system should convey location, type of entity, and utility on a scale (good, indifferent, bad) to the user. Notifications would serve to provide spatial output to the user in the form of actuators at geographically separate points (around the body to indicate position for a vest or vibrator array for a glove). The nature of actuator output would convey the type of in-game entity at the specific location. For example, a health pack would initiate a series of short low intermittent vibration pulses but an enemy would cause fully maximal and continuous vibration at the actuator. Utilizing strength of haptic output to represent danger (good, bad, indifferent) and frequency to represent type of entity is a potentially viable solution.

- User Interaction: A major factor contributing to immersive gaming is the ability to interact with digital scenes and in-game characters. Unlike navigation and in-game cue identification, interaction typically includes feedback from the entity, such as dialog, a response (an enemy fires) or a change in the entity (a health pack disappears). At least two types of interaction are possible: the first is scene object interaction (e.g., picking up a key or a health pack) and the second is dynamic entity interaction (engaging an enemy, or interfacing with a non-player, or friendly, character). With the former, interaction is relatively passive, and it makes sense to layer this information on top of the navigational cues above, whereby a separate tone (or haptic output) indicates the passive entity's response. However, when engaging enemies or other characters, active interaction requires additional feedback and dynamic responses. Here, a “lock in” mode, whereby all haptic resources are dedicated to interacting with the dynamic entity, would direct attention to that entity. Under this system, the in-game notification system would identify an entity's utility to the user via haptic cues (very good or very bad), and the user could initiate interaction, or “lock in” all haptic resources, to track the entity, map it to spatial actuators and convey entity-specific information (hostility, temporal changes, weapon activity). Once the user “un-couples” attention (or the character disappears from the area), the full array of scene probing is reestablished. This method is designed to “silence” inconsequential haptic information during times of critical engagement to increase the clarity and resolution of the engagement.
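
The notification scheme and “lock in” mode proposed in the list above could be prototyped along the following lines. This is a sketch under stated assumptions, not a tested design: the amplitude and pulse-rate tables, the `Entity` fields, the eight-actuator ring and all function names are hypothetical. Amplitude codes utility (good, indifferent, bad), pulse rate codes entity type (with 0.0 meaning continuous vibration, as suggested for an enemy), and the entity's bearing selects the nearest actuator around the body.

```python
from dataclasses import dataclass

# Hypothetical encoding tables: amplitude codes utility, pulse rate codes type.
AMPLITUDE = {"good": 0.3, "indifferent": 0.6, "bad": 1.0}
PULSE_HZ = {"health_pack": 2.0, "key": 4.0, "enemy": 0.0}  # 0.0 = continuous


@dataclass
class Entity:
    name: str
    kind: str           # key into PULSE_HZ
    utility: str        # key into AMPLITUDE
    bearing_deg: float  # clockwise angle from the player's facing direction


def actuator_for(bearing_deg, n_actuators=8):
    """Pick the nearest of n actuators spaced evenly around the body."""
    sector = 360.0 / n_actuators
    return int(((bearing_deg % 360.0) + sector / 2) // sector) % n_actuators


def notifications(entities, locked_on=None, n_actuators=8):
    """Return (actuator index, amplitude, pulse rate) for each entity.

    When locked_on names an entity, every other entity is silenced so that
    all haptic output is dedicated to the one being engaged.
    """
    out = []
    for e in entities:
        if locked_on is not None and e.name != locked_on:
            continue
        out.append((actuator_for(e.bearing_deg, n_actuators),
                    AMPLITUDE[e.utility], PULSE_HZ[e.kind]))
    return out


scene = [
    Entity("medkit", "health_pack", "good", 90.0),  # to the player's right
    Entity("grunt", "enemy", "bad", -90.0),         # to the player's left
]
everything = notifications(scene)
engaged = notifications(scene, locked_on="grunt")
```

In this sketch the health pack produces weak 2 Hz pulses on the right-hand actuator and the enemy produces full-strength continuous vibration on the left; locking on to the enemy drops the health pack cue until attention is un-coupled.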

Possible limitations

Many of the approaches above have been shown to benefit the interactions of individuals with visual impairments with real-world scenes and as such provide a potentially beneficial framework for virtual scenarios. However, caution must be taken when trying to implement such a layered and complex haptic system. The ability to multiplex different signals (using amplitude/frequency modulation as well as additional modalities) and silence less relevant information (via “locking in” attention and feedback) is key to the successful application of a system with a relatively limited set of physical outputs compared to inputs. Care should be taken to reduce overload for the user, potentially by using additional modalities to convey specific notifications or events (a bell to indicate a health pack). Haptic resolution (dynamic response and spatial constraints) is also a major factor that should be considered when localizing feedback systems on the body. It is known that our ability to discriminate between two points varies with position on the skin (Purves, 2001), so the placement of haptic actuators is of great importance. Two-point discrimination thresholds vary from 5 mm on the thumb to 45 mm along the shoulder, suggesting that haptic placement as well as device capabilities should be considered when localizing haptic sources on the body.
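
To make the consequence of these thresholds concrete, the short calculation below estimates how many actuators could be told apart along a line on a given body site if neighbouring actuators must be at least one two-point threshold apart. The two threshold values are those quoted above; the function name and the example span lengths (360 mm across the shoulders, 20 mm along the thumb pad) are assumptions chosen only for illustration, and the result is a rough upper bound that ignores vibration spread through tissue.

```python
# Two-point discrimination thresholds quoted in the text, in millimetres.
TWO_POINT_THRESHOLD_MM = {"thumb": 5.0, "shoulder": 45.0}


def max_distinct_actuators(site, span_mm):
    """Upper bound on actuators along span_mm spaced one threshold apart."""
    threshold = TWO_POINT_THRESHOLD_MM[site]
    # One actuator at the start of the span plus one per full threshold step.
    return int(span_mm // threshold) + 1


shoulder_count = max_distinct_actuators("shoulder", 360.0)  # assumed span
thumb_count = max_distinct_actuators("thumb", 20.0)         # assumed span
```

Under these assumptions, a 360 mm line across the shoulders supports at most nine separable actuators, while a 20 mm line on the thumb supports five, which illustrates why dense arrays are better suited to the hands than to a vest.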

Potential Learning Applications

Importantly, adoption and development of these methods will also have a dramatic impact on inclusion in gaming and learning environments, especially in relation to STEM education. Understanding spatial properties including shape, size, distance and orientation is foundational to developing spatial thinking skills and understanding many topics including algebra, trigonometry, calculus, chemistry, physics, biology and higher mathematics. Typically, students use sight to internalize geometric and spatial properties; students with visual impairments, however, need a different mode of experiencing them. While the development, standardization and implementation of these systems are still in the very early stages, gaming devices and open source tools continue to emerge that will accelerate the adoption and integration of novel modes of computer interaction and provide new ways for students to experience digital content. Tactile graphics, which employ the sense of touch rather than vision to convey spatial properties, have been used to deliver instruction in mathematics (Brawand, 2016), biology (Reynaga-Pena, 2015), chemistry (Copolo, 1995) and physics (Holt, 2018). 3D printing technology is also a valid method for delivering science content (Grice, 2015; Kolitsky, 2014) to blind students, but it suffers from an inability to modify representations, which are typically static, and so has limited use in the gaming sphere. Tools are beginning to emerge that standardize the procedure of generating tactile graphical representations (Pather, 2014); however, more are clearly required to broaden adoption of these practices.
While many of these early studies incorporated printed static models or embossed paper, with the explosion of hardware and software, computer haptics are increasingly being used to convey spatial information using force feedback in real-world space, but further development of both platforms and content is integral to expanding their use. With support for vibration output on many tablet and cellphone devices, one of the simplest ways to incorporate a tactile response is to activate this output (Awada, 2013; Diagram Center, 2017); this approach successfully conveys mathematics concepts (Cayton-Hodges, 2012), graphics and additional STEM content (Hakkinen, 2013), but not without limitations (Klatzky, 2014). Most STEM-based studies have relied on surface haptic approaches, while fewer have incorporated spatial haptic feedback systems (Nikolakis, 2004; Lahav, 2012; Evett, 2009), although spatial feedback is known to facilitate knowledge acquisition of 3-dimensional objects (Jones, 2005). Incorporating 3-dimensional space co-registered to the real world into the haptic workflow provides a more fundamentally “real” experience. Further, it results in an explosive increase in the potential amount of information that can be conveyed to the user or gamer. It is clear that the use of additional senses can help provide cognitive representations of environments and spatial structures, and as VR gaming technologies evolve they need to be incorporated into applications to augment learning in STEM for the visually impaired.

Future Directions

Our lab and others are currently investigating new directions in haptic interaction for navigating physical and virtual environments as well as identifying geometric primitives using multimodal cues and triggers. Recent studies in our lab have focused on evaluating tactile (touch) feedback for the instruction of students who are blind or visually impaired. Pilot studies using the GeoMagic device have affirmed the utility of the method: blind users are able to autonomously recognize designed representations and specific shapes in only a few seconds. This suggests a clear benefit for teaching geometry and basic mathematical principles, something we are currently exploring. In addition, our infrastructure can represent dynamic objects that change over time, and new models can be simply built or downloaded. Our excitement for the method is tempered by the fact that the technology does not simulate the physical sense of touch in a natural or indeed accessible way. We acknowledge that devices do exist that can simulate touch via spatial force feedback, but their cost clearly prohibits the use and mass rollout of such devices. In addition, the available software is extremely limited and requires extensive programming knowledge to extend. As such, using off-the-shelf technology we have also developed a hand tracking system with vibrational feedback for touching virtual objects. Using vibration motors attached to each fingertip, the user is able to probe a virtual object in space and sense 3D objects (Dhaher, 2017). We intend to evaluate the technology and develop lessons for students with visual impairments by deploying these instruments to teach foundational spatial concepts. In addition, we are actively developing interfaces for probing external and virtual environments with tactile feedback for navigation and virtual tele-presence applications.
Using scene depth imaging and physical triggers located at strategic points on the body, users can continuously physically sense details regarding proximal objects and walls in the (virtual) environment. For example, vibration on the right side of the body indicates the presence of an object in the right part of the environment. More specialized “virtual” probes are in development for scanning depth buffers and providing physical and multimodal feedback. Importantly, this thrust is toward the development of a common interface for navigating (gaming) environments using (virtual) environment probes with accompanying audio and touch-based feedback. The end goal is to create a method for probing running applications on the graphics processing unit (GPU) to map inputs and outputs to multimodal cues (sound, vibration).
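
One simple way to turn a depth image into body-side vibration, in the spirit of the approach just described, is sketched below. It is not the authors' implementation: the function name, the three-strip layout (left, center, right), the linear distance-to-intensity mapping and the 4-unit maximum range are all assumptions. The depth image is taken to hold linear distances, one per pixel, and each vertical strip drives one actuator with an intensity that grows as the nearest surface in that strip gets closer.

```python
def region_intensities(depth, max_range, regions=3):
    """Map a depth image to one vibration intensity per vertical strip.

    depth is a list of rows of linear distances; the image must be at least
    `regions` pixels wide. Intensity is 0.0 at or beyond max_range and
    approaches 1.0 as the nearest surface in the strip approaches zero
    distance.
    """
    width = len(depth[0])
    intensities = []
    for k in range(regions):
        c0 = k * width // regions
        c1 = (k + 1) * width // regions
        nearest = min(row[c] for row in depth for c in range(c0, c1))
        closeness = 1.0 - min(nearest, max_range) / max_range
        intensities.append(round(closeness, 3))
    return intensities


# Assumed example frame: open space on the left, an object close on the right.
frame = [
    [4.0, 4.0, 4.0, 2.0, 2.0, 2.0, 0.5, 0.5, 0.5],
    [4.0, 4.0, 4.0, 3.0, 3.0, 3.0, 1.0, 1.0, 1.0],
]
left, center, right = region_intensities(frame, max_range=4.0)
```

Using the nearest pixel in each strip, rather than the average, means a thin obstacle such as a pole is not diluted by the open space around it.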

As is true for the integration of any technology, portability, compatibility and easy end-user access to functionality are critical to adoption. Haptic development is in its early stages and as such is relatively disparate, with most projects creating individual applications rather than platforms for haptic integration into existing software. The development of end-user-friendly middleware, or other programming interfaces, that provides a general means of connecting arbitrary software and its output signals to any type of (haptic) device is critical. A number of platforms for haptic interaction and programming have emerged relatively recently but are still beyond the reach of most end users since they require programming software and detailed knowledge. OpenHaptics Professional (3DSystems, 2018) is a commercially available programming interface designed to facilitate creation of a wide range of software incorporating haptic feedback and interaction. CHAI3D (Chai3D, 2018) is a similar open source project designed to be compatible with a large number of existing hardware devices, and it can be extended to support custom or new hardware. The interface exists as a C++ simulation environment that supports a number of core functions for volume rendering, visualizing CAD files and beyond. Both interfaces suffer from the significant requirement of programming knowledge, and each project built with them is essentially its own specific application. A relatively mature open source library, the Virtual Reality Peripheral Network (VRPN; Taylor, 2001), supported by the National Institutes of Health, allows access to VR tracking devices as well as a limited number of hardware haptic implementations. VRPN aims to be a device-independent interface to virtual reality peripherals and provides a way for applications to communicate via its client-server architecture.
The system provides an excellent way to interface hardware tracking, controllers and human interface devices. However, VRPN still requires a specific module for each hardware device as well as a client module for the running software application. The Open Source Virtual Reality (OSVR, 2018) software development kit (SDK) allows developers to create applications with access to all supported VR headsets and controllers by including its libraries when the application is built. This limits the scope of OSVR and denies end users with no programming knowledge the ability to use the software effectively.

A number of solutions have emerged that address some of these issues and allow running software applications to have no knowledge of the specific targeted hardware while still providing interaction. The open source project OpenVR (OpenVR, 2018) is a programming interface and runtime binary created by Valve (Valve, 2018) that permits many types of VR hardware (including haptic devices) to communicate with running applications. Importantly, the system is compatible with SteamVR (SteamVR, 2018) and can be installed from its portal, but it is still limited to compatible devices and to games and applications available within the SteamVR portal. An older and apparently unmaintained interface does allow software-agnostic access to arbitrary hardware: the GlovePIE system uses an input/output mapping system that lets end users map arbitrary controller inputs to control running applications (GlovePie, 2010). It should be noted that none of the above libraries explicitly supports devices created for the blind, an issue that clearly needs to be remedied to move gaming for the visually impaired forward. Some progress has been made towards allowing any running game or application to have access to any piece of haptic hardware, but these implementations are not broad enough in scope (minimal application/hardware support) and are potentially difficult to implement. In addition, the abstraction layers are designed for interaction using the interface and methods built into the controlling software (for example, a device can only be used to control typical in-game activities such as running or jumping) rather than for generally probing the game's 3D environment and structure. To accomplish this, it will likely be necessary to extract information from running applications at a lower level, for example via the depth buffer of the graphics processing unit (GPU).
This could provide low level access for developed environmental “probes” or tools that are implemented as a simple installable binary providing (haptic) devices access to the display lists of the running application. These digital probes could then be simply translated into pertinent outputs for (blind) users that activates and supports both the hardware specifically designed for the seeing impaired as well as the next generation of VR haptic devices.
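
As an illustration of the depth buffer approach suggested above, the following Python sketch converts a normalized depth value into a distance and then into a vibration strength. It assumes a standard OpenGL-style perspective projection with depth stored in the range [0, 1]. The function names, the near and far plane values and the linear distance-to-intensity mapping are assumptions made for this example and are not part of any existing library.

```python
def linearize_depth(d: float, near: float, far: float) -> float:
    """Convert a depth-buffer value d in [0, 1] to eye-space distance.

    Assumes a standard OpenGL perspective projection (an assumption of this sketch).
    """
    z_ndc = 2.0 * d - 1.0  # map [0, 1] to normalized device coordinates [-1, 1]
    return (2.0 * near * far) / (far + near - z_ndc * (far - near))


def haptic_intensity(distance: float, max_range: float) -> float:
    """Map distance to a vibration strength in [0, 1]; nearer surfaces feel stronger."""
    if distance >= max_range:
        return 0.0
    return 1.0 - max(distance, 0.0) / max_range


def probe(depth_buffer, x: int, y: int,
          near: float = 0.1, far: float = 100.0, max_range: float = 10.0) -> float:
    """Hypothetical probe: read one pixel of a depth buffer and return a strength."""
    distance = linearize_depth(depth_buffer[y][x], near, far)
    return haptic_intensity(distance, max_range)


# A tiny stand-in for a captured depth buffer: one row, two pixels.
# 0.0 is a surface at the near plane, 1.0 is the far plane (empty space).
buffer = [[0.0, 1.0]]
near_strength = probe(buffer, 0, 0)
far_strength = probe(buffer, 1, 0)
```

In a real system the depth values would have to be read back from the running application, which is the difficult part and is not shown here. The sketch only covers the final step of turning a depth reading into a signal that a haptic device could render.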

Conclusions

Here we present the rationale and benefits for using multimodal interaction for gamers with visual impairments, as well as the current state of the art in haptic interfaces for gaming and education and their relationship to enhanced end user immersion and learning outcomes. As these technologies and devices are adopted and evolve, they are poised to have a dramatic impact on the entertainment, education and quality of life of individuals with visual impairments. However, improved accessibility, software implementations and a common interface point would significantly help broaden the impact. Regarding the latter, an underlying interface, or middleware library, built upon a standardized graphics library (such as OpenGL) that provides simple cross-platform haptic access to depth buffers, geometric primitives and the virtual environment would simplify, enhance and expedite the incorporation of new methods of tactile computer interaction. More importantly, it would function with any running game without modification. The human eye is able to acquire much more information in a given time than the somatosensory system, and touch-based representations of color may be arbitrary and difficult to convey; nevertheless, haptic representations will offer a much more natural interface to video games for individuals with visual impairments. The key will be harnessing next generation devices via a simple extraction layer to provide meaningful and compatible haptic outputs. The 2016 Disability Status Report (Erickson, 2016) indicates that 2.4% (7,675,600 people) of non-institutionalized people in the US population reported a visual disability. In addition, it is estimated that there are 36 million blind persons worldwide, with a further 217 million having moderate or severe impairment (Bourne, 2017), indicating that the development and integration of new haptic interfaces (both hardware and software) is warranted to support the entertainment and education of this population of potential gamers.

References

3Dsystems (2018). The OpenHaptics® Developer Edition. Retrieved from: https://www.3dsystems.com/haptics-devices/openhaptics

Awada, A., Issa, Y., B. Tekli, J., & Chbeir, R. (2013). Evaluation of touch screen vibration accessibility for blind users. In Proceedings of the 15th International ACM SIGACCESS Conference on Computers and Accessibility, p. 48.

Blindpad (2018). Blindpad (Tactile Tablet). Retrieved from https://www.blindpad.eu/

Blitab (2018). WORLD’S FIRST TACTILE TABLET. Retrieved from https://blitab.com/

Bourne, R. R. A., Flaxman, S. R., Braithwaite, T., Cicinelli, M. V., Das, A., & Jonas, J. B. (2017). Vision Loss Expert Group. Magnitude, temporal trends, and projections of the global prevalence of blindness and distance and near vision impairment: a systematic review and meta-analysis. Lancet Global Health, 5(9), pp. 888-997.

Brawand, A.C., & Johnson, N.M. (2016). Effective Methods for Delivering Mathematics Instruction to Students with Visual Impairments. Journal of Blindness Innovation and Research, 6(1).

Burton, H., McLaren, D.G., & Sinclair, R. J. (2006). Reading Embossed Capital Letters: An fMRI Study in Blind and Sighted Individuals. Human Brain Mapping, 27(4), pp. 325-339.

Burton, H., Sinclair, R.J., & McLaren, D. G. (2004). Cortical Activity to Vibrotactile Stimulation: An fMRI Study in Blind and Sighted Individuals. Human Brain Mapping, 23(4), pp. 210-228.

Cayton-Hodges, G., Marquez, L., van Rijn, P., Keehner, M., Laitusis, C., Zapata-Rivera, D., Bauer, M., & Hakkinen, M. T. (2012). Technology Enhanced Assessments in Mathematics and Beyond: Strengths, Challenges, and Future Directions. Paper presented at the Invitational Research Symposium on Technology Enhanced Assessments, Washington, DC.

Chai3D (2018). CHAI3D is a powerful cross-platform C++ simulation framework. Retrieved from http://www.chai3d.org/concept/about

Choinière, J. P., & Gosselin, C. (2017). Development and Experimental Validation of a Haptic Compass Based on Asymmetric Torque Stimuli. IEEE Transactions on Haptics, 10(1), pp. 29-39.

Copolo, C.E., & Hounshell, P. B. (1995). Using three-dimensional models to teach molecular structures in high school chemistry. Journal of Science Education and Technology, 4(4), pp. 295-305.

Dhaher, Y., & Clements, R. (2017). A Virtual Haptic Platform to Assist Seeing Impaired Learning: Proof of Concept. Journal of Blindness Innovation and Research, 7(2).

Diagram Center. (2017). Adding Haptic Feedback to HTML. Retrieved from http://diagramcenter.org/integrating-haptic-feedback.html

Duxbury Systems. (2017). Louis Braille and the Braille System. Retrieved from https://www.duxburysystems.com/braille.asp

Erickson, W., Lee, C., & von Schrader, S. (2016). Disability Status Report: United States. Ithaca, NY: Cornell University Yang-Tan Institute on Employment and Disability (YTI).

Evett, L., Battersby, S., Ridley, A., & Brown, D. J. (2009). An interface to virtual environments for people who are blind using Wii technology — mental models and navigation. Journal of Assistive Technologies, 3(2), pp. 30-39.

GlovePie. (2010). Control Games with Gestures, Speech, and Other Input Devices! Retrieved from https://sites.google.com/site/carlkenner/glovepie

Graphiti. (2018). Introducing Graphiti — A Revolution in Accessing Digital Tactile Graphics and More! Retrieved from https://www.aph.org/graphiti/

Grice, N., Christian, C., Nota, A., & Greenfield, P. (2015). 3D Printing Technology: A Unique Way of Making Hubble Space Telescope Images Accessible to Non-Visual Learners. Journal of Blindness Innovation and Research, 5(1).

Hakkinen, M., Rice, J., Liimatainen, J., & Supalo, C. (2013). Tablet-based Haptic Feedback for STEM Content. Paper presented at the International Technology & Disabilities Conference, San Diego, CA.

Hap2U (2018). Piezo actuators generate ultrasonic vibrations on a glass screen and modify the friction of your finger. Retrieved from http://www.hap2u.net/technology/

Hardlight VR (2017). Hardlight Suit. Retrieved from http://www.hardlightvr.com/

Holt, M., Gillen, D., Cook, C., Miller, C. H., Nandlall, S. D., Setter, K., Supalo, C., Thorman, P., & Kane, S. A. (2018). Making Physics Courses Accessible for Blind Students: strategies for course administration, class meetings and course materials. Physics Education, arXiv:1710.08977.

Jones, M. G., Bokinsky, A., Tretter, T., & Negishi, A. (2005). A Comparison of Learning with Haptic and Visual Modalities. Haptics-e, The Electronic Journal of Haptics Research, 3(6).

Keyes, A., D’Souza, M., & Postula, A. (2015). Navigation for the blind using a wireless sensor haptic glove. Paper presented at the 4th Mediterranean Conference on Embedded Computing (MECO), Budva, Montenegro.

Klatzky, R. L., Giudice, N. A., Bennett, C. R., & Loomis, J. M. (2014). Touch-Screen Technology for the Dynamic Display of 2D Spatial Information Without Vision: Promise and Progress. Multisensory Research, 27, pp. 359-378.

Kolitsky, M. A. (2014). 3d printed tactile learning objects: Proof of concept. Journal of Blindness Innovation and Research, 4(1).

Kor-FX (2014). Immersive Gaming Vest. Retrieved from http://korfx.com/

Kostopoulos, K., Moustakas, K., Tzovaras, D., Nikolakis, G., Thillou, C., & Gosselin, B. (2007). Haptic Access to Conventional 2D Maps for the Visually Impaired. Springer International Journal on Multimodal User Interfaces, 1(2), pp. 13-19.

Lahav, O., Schloerb, D.W., Kumar, S., & Srinivasan, M.A. (2012). A Virtual Environment for People Who Are Blind – A Usability Study. Journal of assistive technologies, 6(1), pp. 38-52.

Nikolakis, G., Tzovaras, D., Moustakidis, S., & Strintzis, M. G. (2004). CyberGrasp and PHANTOM Integration: Enhanced Haptic Access for Visually Impaired Users. Paper presented at the 9th Conference Speech and Computer, Saint-Petersburg, Russia.

Nordictrack (2018). FreeStride Trainer Series. Retrieved from https://www.nordictrack.com/ellipticals

Norman, J.F., & Ashley, N. (2011). Blindness enhances tactile acuity and haptic 3-D shape discrimination. Attention, perception & psychophysics, 73(7), pp. 23-31.

OpenVR (2018). OpenVR is an API and runtime that allows access to VR hardware from multiple vendors without requiring that applications have specific knowledge of the hardware they are targeting. Retrieved from https://github.com/ValveSoftware/openvr

Orozco, M., Silva, J., El Saddik, A., & Petriu, E. (2012). The Role of Haptics in Games. In A. El Saddik (ed.), Haptics Rendering and Applications (pp. 217-234). London, United Kingdom: IntechOpen.

OSVR (2018). Open Source Virtual Reality. Retrieved from http://www.osvr.org/

Pather, A. B. (2014). The innovative use of vector-based tactile graphics design software to automate the production of raised-line tactile graphics in accordance with BANA’s newly adopted guidelines and standards for tactile graphics, 2010. Journal of Blindness Innovation and Research, 4(1).

Purves, D., Augustine, G.J., & Fitzpatrick, D. (2001). Neuroscience. Differences in Mechanosensory Discrimination Across the Body Surface. Sunderland, MA: Sinauer Associates.

Quest (2018). Tacit is a sonar-enabled glove that helps the blind to detect when objects are nearby. Retrieved from https://ww2.kqed.org/quest/2011/08/23/glove-with-sonar-helps-the-blind-navigate/

Reynaga-Peña, C. G. (2015). A Microscopic World at the Touch: Learning Biology with Novel 2.5D and 3D Tactile Models. Journal of Blindness Innovation and Research, 5(1).

Röder, B., Rösler, F., & Hennighausen, E. (2007). Different cortical activation patterns in blind and sighted humans during encoding and transformation of haptic images. Psychophysiology, 34(3), pp. 292-307.

Steam Store. (2015, Nov 10). Steam Controller. Retrieved from https://store.steampowered.com

SteamVR. (2018). SteamVR Home: New Maps, Asset Packs, and More. Retrieved from https://steamcommunity.com/steamvr

Steinberg, A. D., Bashook, P. G., Drummond, J., Ashrafi, S., & Zefran, M. (2007). Assessment of faculty perception of content validity of Periosim©, a haptic-3D virtual reality dental training simulator. Journal of Dental Education, 71(12), pp. 1574-1582.

Strasnick, E., Holz, C., Ofek, E., Sinclair, M., & Benko, H. (2018). Demonstration of Haptic Links: Bimanual Haptics for Virtual Reality Using Variable Stiffness Actuation. In Proceedings of CHI 2018. ACM New York, NY, USA.

Tanvas. (2018). Rediscover Touch. Retrieved from https://tanvas.co/

Taylor, R. M., Hudson, T. C., Seeger, A., Weber, H., Juliano, J., & Helser, A. T. (2001). VRPN: a device-independent, network-transparent VR peripheral system. In VRST ’01 Proceedings of the ACM symposium on Virtual reality software and technology (pp. 55-61). New York, NY: ACM.

Teslasuit (2018). Ultimate tech in Smart Clothing. Retrieved from https://teslasuit.io/

Tony, M., Foley, J., & Folmer, E. (2010). Vi-bowling: a tactile spatial exergame for individuals with visual impairments. In Proceedings of the 12th international ACM SIGACCESS conference on Computers and accessibility (pp. 179-186). New York, NY: ACM.

Valve (2018). We make games, Steam, and hardware. Join us. Retrieved from https://www.valvesoftware.com/en/

Virtual Motion Labs (2018). Wireless Finger and Hand Motion Capture. Retrieved from http://www.virtualmotionlabs.com/

Visell, Y., Law, A., & Cooperstock, J. R. (2009). Touch Is Everywhere: Floor Surfaces as Ambient Haptic Interfaces. IEEE Transactions on Haptics, 2(3), pp. 148-159.

VRGluv (2017). Feeling Is Believing. Retrieved from https://vrgluv.com/

Whitmire, E., Benko, H., Holz, C., Ofek, E., & Sinclair, M. (2018). Demonstration of Haptic Revolver: Touch, Shear, Texture, and Shape Rendering on a VR Controller. In Proceedings of CHI 2018. ACM New York, NY, USA.

Wicab (2018). BRAINPORT TECHNOLOGIES – helping people with disabilities live a BETTER LIFE! Retrieved from https://www.wicab.com/

Woojer (2018). Ready. Play. Feel. Retrieved from https://www.woojer.com/vest/

Authors’ Info:

Benjamin Vercellone

Missouri Department of Social Services

benjamin.j.vercellone@dss.mo.gov

John Shelestak

Department of Biological Sciences, Kent State University

jsheles1@kent.edu

Yaser Dhaher

Department of Mathematics, Kent State University

ydhaher@kent.edu

Robert Clements

Department of Biological Sciences, Kent State University

rclement@kent.edu

This work is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License.